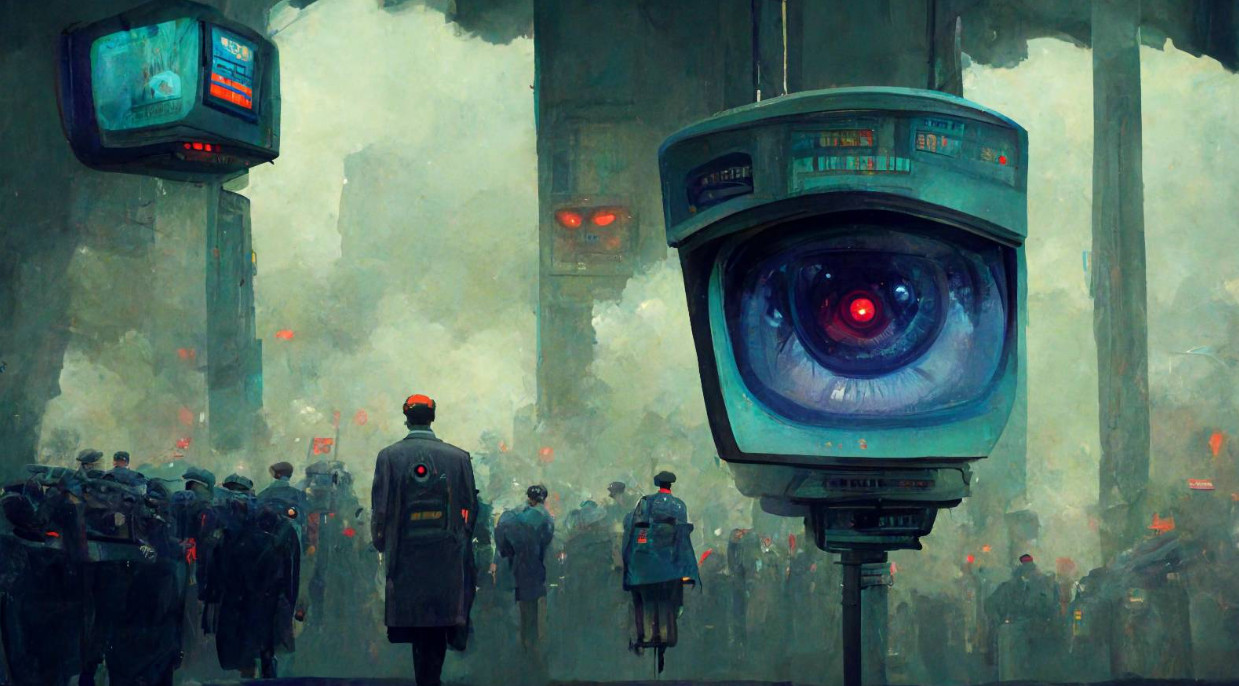

A new media regulator – a safe haven or Big Brother?

Janet Wilson, Contributing Writer

5 June 2023

In George Orwell’s dystopian novel 1984, the citizens of Oceania are watched through telescreens wherever they go by Big Brother, the ruling Party’s omniscient leader. To tighten its grip on its denizens further, and to prevent political rebellion by eliminating every word that could express it, the Party forces its citizens to learn Newspeak, an invented language.

Which raises the question: is New Zealand about to get its own Newspeak moment with the release of the Safer Online Services and Media Platforms discussion document by the Department of Internal Affairs’ Content Regulatory Review?

If those names sound Orwellian, the content behind this proposal is even more so. Despite its aim of providing safer online spaces for everyone, and although it primarily targets previously unregulated social media, it seeks, Big Brother-like, to encompass all forms of media, including mainstream media, social media, film and gaming.

The proposal has been constructed with the terms ‘safe spaces’ and ‘harm minimisation’ firmly in mind. Those ideas are laudable but, as Radio New Zealand’s CEO Paul Thompson said, “The definition of harm is that it is inherently a subjective assessment, whereas any standards regime or code of practice needs an objective set of criteria against which any content can be evaluated.” Which calls into question who gets to decide what is safe and what is harmful.

Overseeing all these reforms would be a new regulator whose Board would be appointed by Government but would be “fully independent of Ministers”. Media organisations with either an annual audience of 100,000 or more or 25,000 annual account holders would have to provide voluntary codes of practice, which the regulator would approve “or send them back to industry for necessary changes to bring them into compliance with the new framework.” Which makes for more Orwellian thinking, because the voluntary part is, in reality, involuntary.

The discussion document is at pains to say that traditional media are likely to welcome the changes. It trumpets that the new regulator would have “no powers over the editorial decisions of media platforms”, yet it would have the power to take down content and to impose penalties for “serious failures of compliance.”

OK, let’s get this right: a powerful new regulator, without political accountability, gets to decide what is ‘safe’, even when it is still legal, in what traditional mainstream media and social media produce (as well as film and gaming)? Sound like 1984, much?

The discussion document itself offers a fascinating insight into how consumers could use the new system, presenting the example of content promoting disordered eating that targets young people being shared online. Once such content is identified as a risk, the document’s authors claim, users could opt out of seeing it, its producers would be warned about the impacts of disordered eating, and the topic could be integrated into school curriculums.

Which potentially makes this new regulator New Zealand’s greatest moral policeman, in a country that’s full of the morally pugnacious. Because here’s the rub – the regulator can’t change what is or isn’t legal online, but it can insist that content be removed because it’s ‘unsafe’ or because it ‘harms’ someone. That is an assault on your ability and mine to express ourselves fully, no matter how bumblingly or inelegantly we do so. This is what Jonathan Ayling of the Free Speech Union calls the ‘lawful but awful’ category of free speech.

Instead of a monolithic bureaucracy monitoring what’s said everywhere, a rival for Newspeak if ever there was one, why not flex the resilience muscle and encourage more speech, not less? If hate-speech laws enacted around the world prove anything (and these reforms are a back-door route to just such a law), it’s that they don’t actually improve how we behave towards each other.

Instead, as in 1984, such laws breed a world where paranoia and cynical distrust reign supreme. A world where it is enough for someone to complain that they don’t feel ‘safe’ to bring the content provider under the scrutiny of the regulator, which will then examine the provider’s safety plan.

If the safety plan is found wanting for whatever reason, and you are deemed to have offended, then watch out: the weaponisation of the complaints process is complete. The regulator has the power to take down your content and fine you.

Mr Orwell couldn’t have designed a more labyrinthine system for robbing a population of its greatest power, the ability to express itself, and for imposing bureaucratically sanctioned Newspeak as a way of expunging thoughtcrime (illegal thoughts) and individuality.

There’s no denying that harmful hate proliferates online. But the answer lies not in suppressing such speech but in more speech – the cleansing disinfectant of counter-speech.

If this new faceless Ministry of Thought worries you, then get cracking. You have until 31 July to make a submission on the discussion document.

Janet Wilson is a freelance journalist and communications consultant.